“Polling is broken.”

That was the headline of an Australian newspaper article by pollster John Utting, a few days after the Australian election that everyone so famously failed to predict.

“Should anyone trust a political poll ever again?”

That was a post-election headline in The Sydney Morning Herald.

“Australian election: How did the polls get it so wrong?”

This was the headline on a news article last Monday on this website, written by this columnist.

If pollsters and respected pundits (and me) are all saying it, surely we can just blame the pollsters? Yes?

Statistician Peter Ellis thinks not. He thinks polling can be improved, but he reckons it is still, on average, feeding quite good information to the world. What needs improving is the way people read polls, and what they do with them. The pundit class, he says, is overreacting to a simple fact: polling is less accurate than most people imagine.

“I think it’s fair to say that punditry is broken,” says Ellis.

Now’s the pundit class’s chance to learn from experts.

Accept uncertainty

Ellis is now a director of The Nous Group, an Australian and UK consultancy. When he worked as a freelance consultant, his clients included the World Bank. He also runs Free Range Statistics, one of the world’s better statistics blogs.

Reading through last week’s analysis of the polls, I judged that Ellis’s was the most sophisticated out there. Importantly, he also has runs on the board. Here he is, tweeting on 13 May, five days before the Australian federal election:

“Saturday is too uncertain to predict. ALP remain favourites but only just; hung parliament requiring small parties or independents for either side is a real possibility; as is even a straight Lib/Nat Coalition win.”


— Peter Ellis (@ellis2013nz) May 13, 2019

That tweet was right – not because it showed certainty that the Coalition would win, but because it expressed Ellis’s substantial lack of certainty about a Labor win.

It’s important to accept the uncertainty that’s there. Most people know that polls have a ‘margin of error’, but that technical term covers just one source of polling error: the chance variation that comes from surveying a sample rather than everyone. There are many other sources.
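To see what the margin of error does and doesn’t cover, here is a minimal Python sketch of the textbook calculation for a single poll. It assumes a simple random sample, which real polls are not, and the sample size and vote share are hypothetical. Nothing in the formula accounts for people who won’t answer, for how results are weighted, or for voters changing their minds.

```python
import math

def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of an approximate 95% confidence interval for a poll share.

    Normal approximation to the binomial, assuming a simple random sample.
    It measures sampling error only; no other source of polling error.
    """
    return z * math.sqrt(p * (1 - p) / n)

# Hypothetical poll: 1,000 respondents, 51% for one side.
moe = margin_of_error(0.51, 1000)
print(f"±{moe * 100:.1f} percentage points")  # prints ±3.1 percentage points
```

Because the margin shrinks with the square root of the sample size, quadrupling the sample to 4,000 only halves it, to about 1.5 points.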

Labor was tipped to win between 51 and 52% of the two-party-preferred vote. They’ll end up with about 49%, so the polling was generally off by about 3 percentage points. That’s a poor result, but not disastrous – although as we’ll see, it did have one suspicious aspect.

How Ellis predicted

Ellis didn’t say “Labor will win”. He said that was the likeliest of a range of possible results, from a Labor majority, through the various possible no-majority results, to a Coalition majority. And he assigned probabilities to those results. His 13 May pre-election analysis gave the Coalition a one in six chance.

Ellis arrived at that Coalition probability partly by adjusting each pollster’s results for its recent tendency to favour Labor. Those tendencies – “house effects”, as he calls them – do not exist because pollsters want Labor to win, or to lose. Rather, they exist because Labor voters are different to Liberal voters in ways pollsters don’t understand or don’t account for well enough.

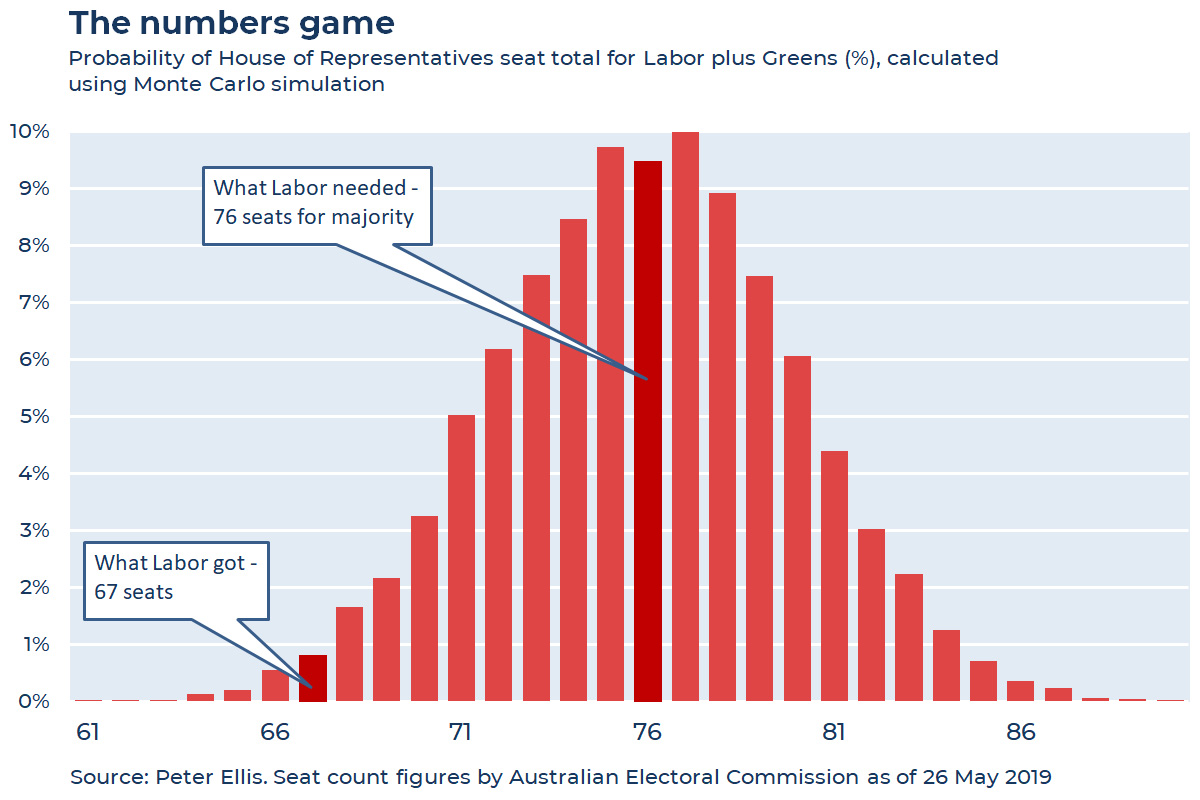
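One simple way to picture a house effect is as a pollster’s average lean compared with a real election result. The Python sketch below does just that, using invented numbers and hypothetical pollster names. Ellis’s actual model is more elaborate, so treat this only as an illustration of the idea, not his method. Its key assumption is that a pollster’s lean stays the same from one election to the next.

```python
from statistics import mean

# Hypothetical data: each pollster's Labor two-party-preferred results (%)
# in the final weeks before a PAST election. Illustrative numbers only.
past_polls = {
    "Pollster A": [51.0, 51.5, 52.0],
    "Pollster B": [50.0, 50.5, 50.0],
}
past_result = 50.0  # hypothetical actual Labor share at that election

def house_effects(polls: dict[str, list[float]], actual: float) -> dict[str, float]:
    """Each pollster's average lean towards Labor, relative to the real result."""
    return {name: mean(results) - actual for name, results in polls.items()}

def adjust(poll: float, pollster: str, effects: dict[str, float]) -> float:
    """Remove a pollster's estimated lean from a new poll result."""
    return poll - effects[pollster]

effects = house_effects(past_polls, past_result)
print(adjust(52.0, "Pollster A", effects))  # prints 50.5
```

A new 52% from Pollster A, which overstated Labor by 1.5 points last time, is read as 50.5%.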

Having estimated what he believed to be the best available swing, together with a probability range around it, Ellis then applied different swings from within that range, over and over, to each of the 151 lower house seats. This is called a Monte Carlo simulation. The same technique helped US statistician Nate Silver assign a 29% probability to Trump winning the 2016 US election – rather more than most other people gave him.
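The mechanics of a Monte Carlo election simulation fit in a few lines. This Python sketch is not Ellis’s code: the seat margins, the swing and its spread, and the seat-level noise are all invented, and treating each seat’s deviation from the national swing as independent normal noise is a simplifying assumption. Each run draws one national swing, nudges every seat a little differently, and counts the seats won; repeating that thousands of times gives a distribution of outcomes rather than a single prediction.

```python
import random

MAJORITY = 76  # seats needed for a majority in the 151-seat lower house

def simulate_seats(margins, swing_mean, swing_sd, seat_sd, n_sims=5_000, seed=1):
    """Monte Carlo simulation of how many seats one party wins.

    margins: the party's two-party-preferred share minus 50 in each seat at
             the last election (percentage points; positive means it held the seat).
    Each run draws one national swing, adds independent seat-level noise,
    and counts the seats where the party's new margin is above zero.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(n_sims):
        national = rng.gauss(swing_mean, swing_sd)
        won = sum(1 for m in margins if m + national + rng.gauss(0, seat_sd) > 0)
        results.append(won)
    return results

# Hypothetical seat margins, spread evenly from -20 to +20 points.
margins = [-20 + 40 * i / 150 for i in range(151)]
sims = simulate_seats(margins, swing_mean=1.5, swing_sd=2.0, seat_sd=3.0)
p_majority = sum(s >= MAJORITY for s in sims) / len(sims)
print(f"Chance of a majority: {p_majority:.0%}")
```

A more realistic version would use the real seat margins and let seats in the same state move together, which widens the spread of outcomes.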

Ellis, like Silver in the US, ended up with a distribution of probabilities for each party. Here’s Labor’s distribution:

Now to most people, thinking like this feels wrong. What’s the point of saying Trump had a 29% chance, or Morrison a 16% chance? Polls are supposed to predict a winner – and if they can’t, what the heck are they good for?

At that point, statisticians need to say: sorry, but the world doesn’t work like that. Uncertainty exists, and so you have to express it somehow.

What pundits need to know

If Ellis could assemble the nation’s political pundits in a room, here’s what he’d tell them:

- Polling needs to improve

“Pollsters definitely need to have a good, hard look at their techniques,” Ellis says. They need to improve how they calculate their results, how they adjust for the difficulties of getting a good sample of voters, and more.

One possibility, Ellis says, is that pollsters are not weighting their results to adjust for education. In Australia, as in the US, voters with less formal education seem harder to contact. Failing to weight for education hurt US pollsters’ results in 2016.

That may be the case here, too. Last week the Australian National University’s Ben Phillips calculated that, of the known factors, lower education was the strongest single predictor of a voting area swinging to the Coalition.
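Weighting by education means making each group count in proportion to its share of the population, rather than its share of the people who happened to answer. Here is a minimal post-stratification sketch in Python; every figure in it is invented for illustration, and real pollsters typically weight on several variables at once rather than one.

```python
# Hypothetical figures, invented for illustration.
population_share = {"degree": 0.30, "no_degree": 0.70}   # e.g. from census data
sample_share = {"degree": 0.45, "no_degree": 0.55}       # who actually answered
coalition_support = {"degree": 0.40, "no_degree": 0.55}  # Coalition share in each group

def group_weights(pop: dict[str, float], sample: dict[str, float]) -> dict[str, float]:
    """How much each respondent in a group should count: population share / sample share."""
    return {g: pop[g] / sample[g] for g in pop}

def weighted_support(support, sample, weights) -> float:
    """Weighted average of group support, using each group's sample share times its weight."""
    total = sum(sample[g] * weights[g] for g in support)
    return sum(support[g] * sample[g] * weights[g] for g in support) / total

unweighted = sum(coalition_support[g] * sample_share[g] for g in coalition_support)
weights = group_weights(population_share, sample_share)
weighted = weighted_support(coalition_support, sample_share, weights)
print(f"Unweighted: {unweighted:.1%}  Weighted by education: {weighted:.1%}")
```

In this made-up example, graduates answer the poll more readily and lean away from the Coalition, so skipping the education weight understates the Coalition’s support.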

The pollsters also seem, fairly clearly, to be influenced by each other, and that needs to stop. Evidence of “herding” was plain in the pre-election polls: the last 17 poll results before the election all had Labor on 51 or 52%, a result that’s extremely hard to obtain by chance. Herding happens more than you might think.
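Why is that clustering so hard to obtain by chance? A rough Python check, under generous assumptions: each poll is an independent simple random sample of a hypothetical 1,000 people, the true Labor share sits at 51.5% (the middle of the band, which maximises the odds), and a result is reported as 51 or 52 whenever it falls between 50.5% and 52.5%. This is an illustrative back-of-envelope sketch, not a published analysis.

```python
import math

def prob_in_band(true_share: float, n: int, low: float, high: float) -> float:
    """Chance that one poll's raw share lands in [low, high), normal approximation.

    Shares are proportions (0.515 means 51.5%). Assumes a simple random sample
    of size n and no error other than sampling error.
    """
    sd = math.sqrt(true_share * (1 - true_share) / n)

    def cdf(x: float) -> float:
        return 0.5 * (1 + math.erf((x - true_share) / (sd * math.sqrt(2))))

    return cdf(high) - cdf(low)

p_one = prob_in_band(0.515, 1000, 0.505, 0.525)  # one poll reported as 51 or 52
p_all = p_one ** 17  # all 17 polls, treated as independent
print(f"One poll: {p_one:.2f}; all 17: about 1 in {1 / p_all:,.0f}")
```

Larger samples improve the odds, but they stay long. And because real polls carry more error than sampling alone, real results should be even more scattered than this calculation allows.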

- Polling must be more open

Pollsters also need to be more transparent, says Ellis. For example, he says, “good practice is that they publish their methods and [explain] how they do their weighting.” Australian pollsters prefer to publish just the results they want people to see, meaning they never get constructive suggestions about how to improve.

The lack of transparency also leads to ill-informed commentary about what pollsters actually do, including the outdated criticism that they only phone landlines.

“I have zero belief in polls. Nobody I know has a landline. Nobody I know has ever been polled. They are a media invention to influence elections and are a bane on democracy. Shorten 'popularity' is a media invention to suit a narrative.”

— James M. (@dotrat) May 18, 2019

And pollsters now have few places to hide. In this election, outside analysts have brought new professional expertise to the number-crunching – Ellis, Kevin Bonham, Peter Brent, Nathan Ruser, Mark the Ballot and more. As Kevin Bonham put it on his site: “The pollsters are partly responsible for the ignorance that breeds in a vacuum, because they’ve given the public the mushroom treatment for so long.”

- Communicate polls better

Political writers and commentators need to take the lead in educating the public about polls. If you’re going to report them, learn more about them. Accept and explain that polls are uncertain, even if that’s hard for people to understand.

Recognise that pollsters have a vested interest in talking up their own accuracy, and be sceptical. When the polls say, “option A is more likely”, explain that this is not a prediction that option A will happen. Don’t just provide the brightest and simplest version of your polls’ story, even if it’s an important part of your newspaper’s brand (hello Newspoll).

Taking a good hard look

The pundit class has been busy this week saying that Labor has to take a good hard look at itself, confess to its mistakes and start doing better. The pundits themselves need to do the same.

Late last week Tory Maguire, national editor of The Sydney Morning Herald and The Age, said her papers might do just that. “If we do decide to continue publishing polls in the future, perhaps we should reconsider the way we report them,” she said.

Peter Ellis, and quite a few other professional statisticians, will be nodding in agreement.